AI is taking over… but what about the ethics?

As NASA’s Voyager 2 probe enters interstellar space, tentatively opening a window onto what lies beyond our solar system, another technology-enabled adventure is underway back home on Earth: our fourth industrial revolution, or Industry 4.0.

Self-driving cars ‘talk’ to ‘intelligent’ traffic lights that can perceive and protect road users. Zero-emission energy grids draw on startling new materials and processes. And robotics provides replacements for human ears, eyes and limbs, to name just a few examples.

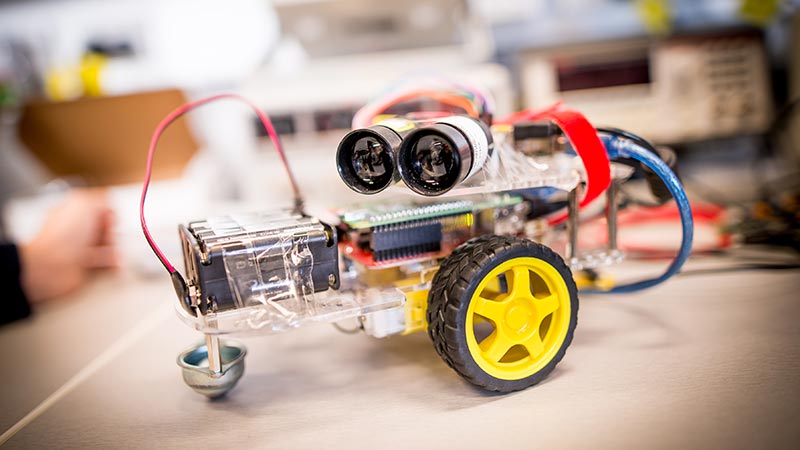

An autonomous robot used for testing as part of Melbourne Information, Decision and Autonomous Systems (MIDAS)

Essential to the research, delivery and performance of Industry 4.0 is the digital power duo: Big Data and Artificial Intelligence (AI).

With AI comes the ability to embed into almost every human activity the real-time sorting of enormous amounts of interacting data.

This AI-driven sorting also brings the ability to detect complex patterns in data to support near instantaneous decision-making. Put another way, AI makes it possible to think quickly and vastly.

In one sense, AI is a response to the idea that we have already solved most of what we can with a single engineering discipline and simple mathematical rules.

Yet challenges remain — some a result of prior industrial revolutions. And the challenges are complex, often lacking any simple higher-order rules to draw upon.

AI is the next-generation software that cuts time-consuming processing to such an extent that questions now arise as to whether human thought will be able to match this super-processing capability.

We are seeing in early technologies such as facial recognition and automated debt collecting (as in the case of Australia's Robodebt system) that AI has been granted authority to make important decisions about people.

Biometric Mirror highlights some of the ethical risks in AI-dependent facial scanning technology

So along with AI comes AI ethics, a hot topic at the World Engineers Convention (WEC) held in Melbourne during November 2019.

Greg Adamson, Associate Professor and Enterprise Fellow in Cybersecurity at Melbourne School of Engineering, took up the issue at the Convention with a proactive stance.

He reported that the Institute of Electrical and Electronics Engineers (IEEE), a global professional organisation with 420,000 members across 160 countries, had crowd-sourced ideas to produce a comprehensive dissertation on ethics.

This has been published as AI: Ethically Aligned Design: A Vision for Prioritising Human Well-Being with Autonomous and Intelligent Systems, First Edition.

“Over 3000 people from all over the world, 40 per cent women, were involved in its creation,” Associate Professor Adamson said.

The IEEE strategy is to put the profession onto the front foot, fully prepared for the debate to come.

To create the paper, contributing engineers derived a set of human-centric principles impacted by AI and then codified protections in the form of a new set of engineering standards to advance technology for the benefit of humanity.

The principles prioritised included consideration for universal human values, human rights and well-being, along with the need for data agency, data privacy and protection from algorithmic bias.

The need for transparency, accountability and awareness of potential misuse was also articulated, along with a need for technical dependability and competence on the part of the creators of these intelligent systems.

A show of hands during the conference presentation highlighted the high level of involvement from engineers in general standards-related work. That same familiarity is now being used as a basis for an ethical rollout of intelligent systems and their associated AIs.

Associate Professor Adamson invited engineers globally to join these efforts through the IEEE’s P7000™ Standards Working Groups, which are developing future standards on ethics and AI, and through the development of a Standards Certification Program.

Impacts are already apparent. For instance, just a week before WEC, the Australian Government released a set of principles that draws on the IEEE Ethically Aligned Design document.

Several large corporations have put up their hands to adopt these same principles.

“The development of ethical practices is critically important in addressing technology challenges as the world becomes increasingly and inseparably linked to Autonomous and Intelligent Systems,” he said.

“It’s an area that I and the University of Melbourne are very interested in. We are bringing together the best minds to continue advancing this important area.”

For more information visit Cybersecurity at Melbourne School of Engineering.
