AI for people: User design to enhance human capabilities

AI isn’t just about improving how machines perform; it can improve how we perform, too.

Dr Eduardo Velloso, who is based in the Faculty of Engineering and Information Technology at the University of Melbourne, designs human-centred artificial intelligence systems. He is part of a worldwide movement that is embracing more ‘natural’ ways of interacting with computers (such as voice commands) and designing systems that adapt to individuals’ needs.

His work is helping people use voice commands to take more useful notes when reading complex texts, and is helping to identify when online learners need support. In the future, it could even support disease diagnosis.

Underpinning all these innovations are ‘intelligent feedback loops’: interactive systems that integrate sensors and AI to create what Dr Velloso calls “zero interaction scenarios”.

“My work is built on a vision that includes the widespread use of sensors in the environment, that people wear, or they carry,” he says.

Sensors continuously collect data, and AI systems make inferences from that data. Output devices, such as wearable computers, augmented or virtual reality headsets, or projections, complete the loop by presenting information to users in a meaningful way.
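To make the sense, infer and present stages concrete, here is a minimal sketch of such a loop in Python. It is an illustration only, not Dr Velloso’s system: the `Reading` record, the `feedback_loop` and `toy_infer` functions, the three-reading window and the heart-rate threshold are all hypothetical, chosen to show how the three stages connect.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class Reading:
    """One sample from a sensor (hypothetical schema)."""
    sensor: str
    value: float


def feedback_loop(
    readings: Iterable[Reading],
    infer: Callable[[List[Reading]], Optional[str]],
    present: Callable[[str], None],
    window: int = 3,
) -> None:
    """Sense -> infer -> present: keep the most recent readings, ask the
    model for an inference, and pass any result to an output device."""
    buffer: List[Reading] = []
    for reading in readings:
        buffer.append(reading)            # sense
        buffer = buffer[-window:]         # keep only the latest `window` readings
        message = infer(buffer)           # infer
        if message is not None:
            present(message)              # present (e.g. on a headset or display)


def toy_infer(recent: List[Reading]) -> Optional[str]:
    """Hypothetical stand-in for an AI model: flags a sustained high heart rate."""
    rates = [r.value for r in recent if r.sensor == "heart_rate"]
    if len(rates) == 3 and sum(rates) / len(rates) > 100:
        return "Elevated heart rate: consider a short break"
    return None


# Usage: a simulated sensor stream, with a list standing in for the output device.
shown: List[str] = []
stream = [Reading("heart_rate", v) for v in (80, 95, 110, 120, 115)]
feedback_loop(stream, toy_infer, shown.append)
```

Keeping the model (`infer`) and the output device (`present`) as plain function parameters reflects the article’s point that the same inferences can be delivered through very different devices.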

He sees AI as crucial, both to tie the process together and because human cognition needs help interpreting large volumes of data. Simply put, our brains can’t cope with such explosions of data on their own.

Practical applications

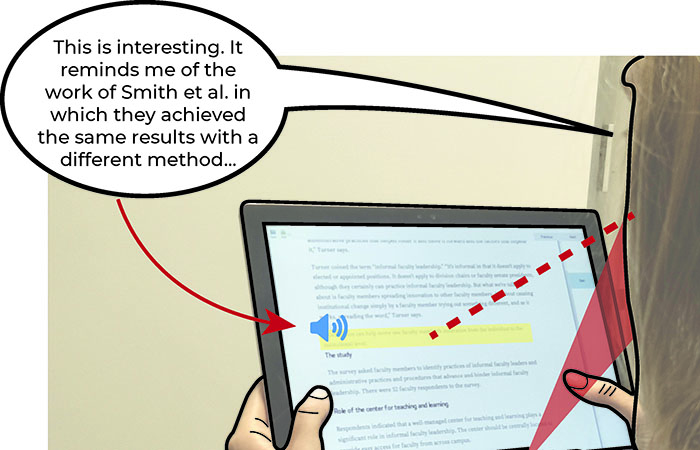

In a recent research project, Dr Velloso used eye trackers to monitor readers’ eye movements, combined with a machine learning system that analyses their behaviour, the text itself, and the content of the voice notes they make.

“You voice your thoughts, and the AI attaches those thoughts as notes to the corresponding segments of text. You don’t have to manually highlight text or write or type. All you need to do is to read and speak, which are natural behaviours,” Dr Velloso explains.
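The article does not say how the system decides which segment a spoken note belongs to. One simple way to picture it, assumed here purely for illustration and not the project’s published method, is to attach each note to the text segment the reader looked at longest in the few seconds before they started speaking. The `Fixation` and `VoiceNote` records, the `attach_note` function and the five-second look-back window are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Fixation:
    start: float       # seconds since the reading session began
    duration: float    # seconds
    segment_id: str    # text segment the reader was looking at


@dataclass
class VoiceNote:
    start: float       # when the reader began speaking
    text: str


def attach_note(note: VoiceNote, fixations: List[Fixation],
                lookback: float = 5.0) -> Optional[str]:
    """Return the segment with the most gaze time in the `lookback` seconds
    before the note started, or None if no fixation falls in that window.
    (Hypothetical heuristic, not the researchers' algorithm.)"""
    window_start = note.start - lookback
    dwell: Dict[str, float] = defaultdict(float)
    for f in fixations:
        # Clip each fixation to the window [window_start, note.start].
        overlap = min(f.start + f.duration, note.start) - max(f.start, window_start)
        if overlap > 0:
            dwell[f.segment_id] += overlap
    if not dwell:
        return None
    return max(dwell, key=lambda seg: dwell[seg])


# Usage: the reader dwells longest on "para-3" before speaking at t = 7 s.
fixations = [
    Fixation(0.0, 2.0, "para-1"),
    Fixation(2.0, 1.0, "para-2"),
    Fixation(3.0, 3.0, "para-3"),
    Fixation(6.0, 0.5, "para-2"),
]
note = VoiceNote(start=7.0, text="This contradicts the earlier claim")
segment = attach_note(note, fixations)
```

A real system would likely combine gaze with the meaning of what was said, as the article notes the model also analyses the text and the note content; gaze dwell time alone is the simplest possible signal.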

For memorisation tasks, the process provided similar learning outcomes to typed notes. But when it came to more complex tasks, like connecting different concepts, it performed better than typed notes. Dr Velloso says this is because readers made more detailed notes, with more ‘idea units’.

“They connected more concepts and engaged more deeply with the material rather than just summarising it.”

It’s a technology that could be applied to any type of knowledge work: for instance, a reviewer could give the author of an article or report feedback without ever needing to touch a keyboard.

Another project Dr Velloso oversees aims to identify when students are struggling to understand the content of video lectures, replicating the observations teachers make when they spot students who need help. It uses thermal cameras to record facial temperature (increased blood flow and raised temperature are thought to correlate with increased mental workload), alongside eye trackers, cameras that record facial expressions, and students’ self-reported assessments of their learning.

With the rise of online learning during the COVID-19 pandemic likely to change the way students learn in the longer term, the technology could have widespread applications.

The right sensor for the job

In any application, the technology depends on finding the right types of sensors, such as thermal cameras, to provide the right kind of information.

Depending on the type of data required, sensors might be placed in a building, built into a motion capture system, or even worn on the body to track physiological responses such as heart rate.

Ultimately, however, people are very different, even at a physiological level. And even with the ‘perfect algorithm’, it is hard for AI to determine the causes of behaviour; it can only observe the consequences.

“But the richer the data, the clearer the picture might be,” says Dr Velloso.

“It’s all part of the puzzle we have to solve.”

Dr Velloso’s research also helps to determine what kind of information users need, and how that can be provided in the most useful way.

As an example, he suggests that an AI system could analyse large patient data sets to make a disease diagnosis. It could then simply provide that diagnosis. But it could also provide the diagnosis together with its associated certainty, or together with alternative diagnoses.

All three outputs would be based on the same algorithm, but presented in different ways that could lead users to think differently about next steps.
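The idea that one model output can be presented in several ways can be sketched in a few lines of Python. This is a hypothetical illustration: the `present` function, the mode names, the condition names and the probabilities are invented, and treating a model’s probability as its “certainty” assumes the model is well calibrated.

```python
from typing import Dict


def present(probs: Dict[str, float], mode: str) -> str:
    """Render the same model output (a probability per diagnosis) in one of
    three hypothetical presentation modes."""
    # Rank diagnoses from most to least likely; the underlying output never changes.
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    top, p = ranked[0]
    if mode == "diagnosis":
        return top                                  # the diagnosis alone
    if mode == "with_certainty":
        return f"{top} ({p:.0%} certainty)"         # diagnosis plus certainty
    if mode == "with_alternatives":
        alternatives = ", ".join(name for name, _ in ranked[1:3])
        return f"{top}; alternatives: {alternatives}"  # diagnosis plus runners-up
    raise ValueError(f"unknown presentation mode: {mode}")


# Usage: invented probabilities for three hypothetical conditions.
output = {"Condition A": 0.62, "Condition B": 0.25, "Condition C": 0.13}
views = {mode: present(output, mode)
         for mode in ("diagnosis", "with_certainty", "with_alternatives")}
```

Only the final rendering step differs between the three modes, which mirrors the point that the presentation, not the algorithm, shapes how users think about next steps.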

“My colleagues work on the technical details of explainability and AI systems. Many users won’t need to know the inner workings of an algorithm. But the information it provides, in terms of what matters to users and how the information is presented, is critical,” Dr Velloso says.