AI assurance and trust

Providing independent testing of the accuracy, robustness and effectiveness of AI platforms.

AI assurance involves technical processes for testing the behaviour of algorithms. These processes are guided by ethical, legal and regulatory frameworks. Work on these frameworks is led by the University’s interdisciplinary Centre for AI and Digital Ethics (CAIDE).

Capabilities

- Verification of systems that incorporate AI

- Quality, safety and reliability of AI systems

- Robustness of data collection and AI models

- Resilience of AI systems to attacks by adversaries

- Interpretability of AI algorithms

- Conformance checking of processes incorporating AI

- Competency, privacy guarantees and risks

Sectors

All sectors are looking to perform assurance of AI-based systems.

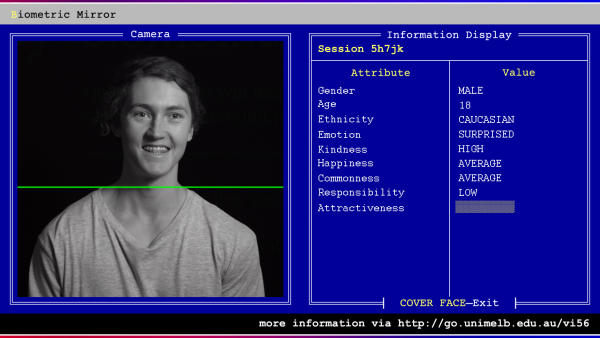

Holding a Black Mirror up to Artificial Intelligence

Biometric Mirror is an interactive application that shows how you may be perceived by others. But it’s flawed and teaches us an important lesson about the ethics of AI.

AI: It’s time for the law to respond

The law is always behind technology, but given the sweeping changes heralded by new technologies, legislators need to get in front of the issue.